Big tech and bad faith

Reckoning with AI

In the first note thinking through the arrival of ChatGPT and the incipient AI arms race, I suggested Shoshana Zuboff’s work on surveillance capitalism as an important reference point. The behaviour of big tech over the last 15 years offers a clear track record of what we might expect, and it does not look promising: an oligopoly in which a small group of companies and people have become incredibly powerful and wealthy by delivering services that produce considerable benefits and conveniences but also cause extensive societal harm, all while resisting regulation and avoiding accountability.

Sam Altman, the head of OpenAI, has announced their expansive aims:

If AGI [Artificial General Intelligence] is successfully created, this technology could help us elevate humanity by increasing abundance, turbocharging the global economy, and aiding in the discovery of new scientific knowledge that changes the limits of possibility.

AGI has the potential to give everyone incredible new capabilities; we can imagine a world where all of us have access to help with almost any cognitive task, providing a great force multiplier for human ingenuity and creativity.

On the other hand, AGI would also come with serious risk of misuse, drastic accidents, and societal disruption. Because the upside of AGI is so great, we do not believe it is possible or desirable for society to stop its development forever; instead, society and the developers of AGI have to figure out how to get it right.

Fortunately for OpenAI, they have determined that what is in their best interests also happens to be best for humanity. No alignment problems here. Altman further claims:

We believe that the future of humanity should be determined by humanity, and that it’s important to share information about progress with the public. There should be great scrutiny of all efforts attempting to build AGI and public consultation for major decisions.

Yet in its ‘GPT-4 Technical Report’, OpenAI has chosen to release little information about the model:

Given both the competitive landscape and the safety implications of large-scale models like GPT-4, this report contains no further details about the architecture (including model size), hardware, training compute, dataset construction, training method, or similar.

Put differently, ‘trust us’. All things considered, it would be safer to work on the assumption of bad faith, which has certainly become the default in today’s world.

Nature identifies this lack of transparency as a major concern, and Kate Crawford emphasises this issue:

There is a real problem here. Scientists and researchers like me have no way to know what Bard, GPT4, or Sydney are trained on. Companies refuse to say. This matters, because training data is part of the core foundation on which models are built. Science relies on transparency.

Without knowing how these systems are built, there is no reproducibility. You can't test or develop mitigations, predict harms, or understand when and where they should not be deployed or trusted. The tools are black boxed.

Gary Marcus echoes these sentiments:

Microsoft and OpenAI are rolling out extraordinarily powerful yet unreliable systems with multiple disclosed risks and no clear measure either of their safety or how to constrain them. By excluding the scientific community from any serious insight into the design and function of these models, Microsoft and OpenAI are placing the public in a position in which those two companies alone are in a position [to] do anything about the risks to which they are exposing us all.

There are many problems with the rationales being presented by OpenAI, with the most basic being that incredibly consequential decisions are being made with remarkably limited openness or oversight. As Kelsey Piper judges, ‘this is a ludicrously high-stakes decision for OpenAI to be in a position to make more or less on its own.’ Regardless of how consequential the current and next generation of AI models might be, the societal case for moving at breakneck speed is not strong. Yet there is a basic and powerful economic logic for moving as quickly as possible. This will likely be a game in which a few actors will win big.

Turning to The Economist:

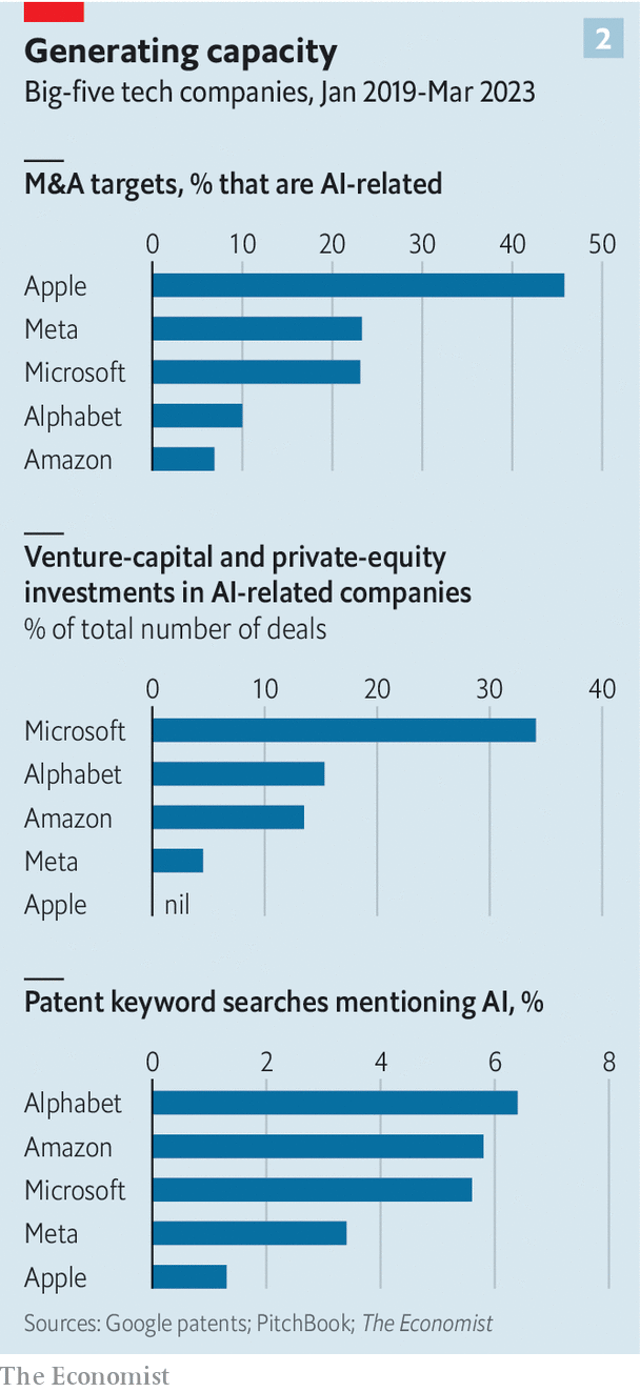

The tech giants have everything they need—data, computing power, billions of users—to thrive in the age of AI, and consolidate their dominance of the industry. But they recall the fate of once-dominant firms, from Kodak to BlackBerry, that missed previous digital platform shifts, only to sink into bankruptcy or irrelevance. So whether or not the AI evangelists are correct, big tech isn’t taking any chances. The result is a deluge of investments.

Given the tendency to compare AI technology with the advent of nuclear weapons, one difference is worth emphasising: nuclear development was government-led, whereas AI has become overwhelmingly dominated by the private sector.

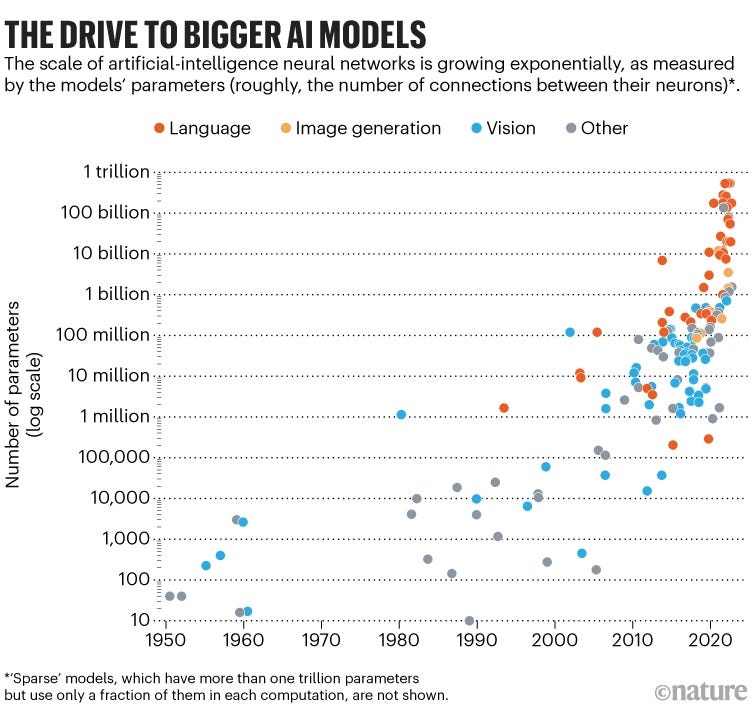

Large language models are expensive to train and run: they require considerable computing power and large data sets, and they are energy intensive. The continued trend towards bigger models favours big players with more resources.

These recent advances in AI technology have arrived at a time when many people are already confused and disorientated by the combination of shocks summed up in the notion of polycrisis. Given this, it is unsurprising that there is a tendency to focus on the big metaphysical questions and apocalyptic risks. Without denying the importance of these themes, we should keep at the front of our minds that this is more immediately about competition and power. Returning to The Economist:

The mass of resources that big tech is ploughing into the technology reflects a desire to remain not just relevant, but dominant.

Big tech, big money, big stakes. Back to Altman’s big words:

Successfully transitioning to a world with superintelligence is perhaps the most important—and hopeful, and scary—project in human history. Success is far from guaranteed, and the stakes (boundless downside and boundless upside) will hopefully unite all of us.

To conclude, let’s turn to Nicola Chiaromonte’s 1970 book, The Paradox of History, which included a chapter entitled ‘An Age of Bad Faith’:

Today, instead of the cult of ideologies we seem to have adopted a cult of the automobile, television, and machine-made prosperity in general. But this cult is based on a belief fomented by bad faith, the belief that material (industrial, technological, and scientific) advances go hand in hand with spiritual progress; or, to be more precise, that the one cannot be distinguished from the other…

Chiaromonte continued:

Yet it should be obvious that the automatism of the present world does moral man the greatest possible harm. It increases his physical power while increasing his capacity for aimless action, that is to say, his stupidity. At the same time his capacity for good becomes atrophied, since it is generally believed that man’s power over matter and his ability to acquire material possessions solve or cancel all other problems.

It is unclear whether the current wave of AI will mostly offer an increased capacity for aimless action, or whether it portends something more significant. Regardless, it appears highly likely that, without significant countervailing forces, these technological developments will reinforce and deepen the political, economic and societal distortions of surveillance capitalism.